In a significant move toward regulating artificial intelligence (AI), the European Union’s AI Act (EU AI Act) was published in the Official Journal of the European Union on July 12, 2024. As the first comprehensive legislation of its kind, the act aims to ensure that AI systems within the EU are safe, trustworthy, and respectful of fundamental rights. Most obligations do not take effect until August 2, 2026, but for organizations doing business in the EU, the regulation introduces stringent requirements and a layer of complexity they may not be used to. Here’s what you need to know to navigate this new regulatory landscape effectively.

Key Elements of Compliance

A cornerstone of the EU AI Act is classifying the risk associated with each AI system. This requires rigorous new processes for evaluating AI systems pre-deployment, blocking prohibited systems, and implementing continuous monitoring. The act’s risk-based classifications include:

- Prohibited AI Systems: AI systems posing an unacceptable risk, such as social scoring by governments and certain biometric surveillance, will be banned.

- High-Risk AI Systems: These include AI systems used in critical infrastructure, education, employment, and essential private services like credit scoring. High-risk AI systems are subject to requirements covering risk management, data governance, transparency, human oversight, and continuous monitoring.

- Limited and Minimal Risk Systems: Subject to lighter obligations, mainly focusing on transparency.
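
The tiers above can be sketched as a simple first-pass triage step in an intake workflow. This is a minimal illustration only: the `RiskTier` enum, the keyword sets, and the `triage` function are hypothetical names, and the keywords are lifted from the examples in this article rather than from the act's legal criteria. A real classification needs legal review against the act itself.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED_OR_MINIMAL = "limited_or_minimal"


# Hypothetical lookup sets built from the examples named in this article.
# They are NOT the act's legal definitions. "Certain biometric surveillance"
# is left out because it cannot be decided by keyword alone.
PROHIBITED_USES = {"government social scoring"}
HIGH_RISK_AREAS = {
    "critical infrastructure",
    "education",
    "employment",
    "credit scoring",
}


def triage(use_area: str) -> RiskTier:
    """Return a first-pass tier for a declared use area (case-insensitive)."""
    area = use_area.strip().lower()
    if area in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if area in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    # Anything unrecognized falls through to the lightest tier here, so a
    # human reviewer should still confirm the result.
    return RiskTier.LIMITED_OR_MINIMAL
```

A triage like this is useful mainly for routing: anything flagged `PROHIBITED` is blocked, and anything flagged `HIGH` is sent to the full review process.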

In addition, the act establishes the AI Office within the European Commission to enforce the rules. This office is supported by a scientific panel and an AI board made up of representatives from the member states. The EU AI Act also introduces AI regulatory sandboxes, which allow AI systems to be tested in a controlled environment. Proper testing before deployment helps ensure that AI systems do not infringe on fundamental rights and that they align with security best practices.

Timeline for Compliance

- August 1, 2024: The EU AI Act enters into force.

- November 2, 2024: Member States must identify and publicly list the authorities responsible for protecting fundamental rights under the act.

- February 2, 2025: Chapters I and II become applicable. These cover, among other things, AI literacy, geographic scope, definitions, and provisions on prohibited AI practices.

- May 2, 2025: Policies and processes related to general-purpose AI models will be required.

- August 2, 2025: Additional requirements become applicable, including rules on notifying authorities, general-purpose AI models, governance structures, and penalties for non-compliance.

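- August 2, 2026: The act becomes generally applicable. The classification rules in Article 6(1) and their corresponding obligations follow on August 2, 2027.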
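
For teams tracking these dates in tooling, the timeline can be encoded as data and queried. The sketch below is an assumption-laden illustration: `MILESTONES`, `in_effect`, and `next_milestone` are hypothetical names, and the labels are short summaries of the list above, not legal text.

```python
from datetime import date
from typing import Optional

# Dates taken from the timeline in this article; labels are abbreviated.
MILESTONES = [
    (date(2024, 8, 1), "Act enters the EU legal order"),
    (date(2024, 11, 2), "Member States identify authorities"),
    (date(2025, 2, 2), "Chapters I and II apply"),
    (date(2025, 5, 2), "General-purpose AI milestone"),
    (date(2025, 8, 2), "Governance and non-compliance rules apply"),
    (date(2026, 8, 2), "General application date"),
]


def in_effect(today: date) -> list[str]:
    """Labels of all milestones whose date has already been reached."""
    return [label for when, label in MILESTONES if when <= today]


def next_milestone(today: date) -> Optional[tuple[date, str]]:
    """The earliest milestone strictly after `today`, or None if all passed."""
    upcoming = [(when, label) for when, label in MILESTONES if when > today]
    return min(upcoming) if upcoming else None
```

For example, `next_milestone(date(2025, 3, 1))` returns the May 2, 2025 entry, which makes it easy to drive reminders or dashboard countdowns from a single source of truth.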

5 Factors for Success

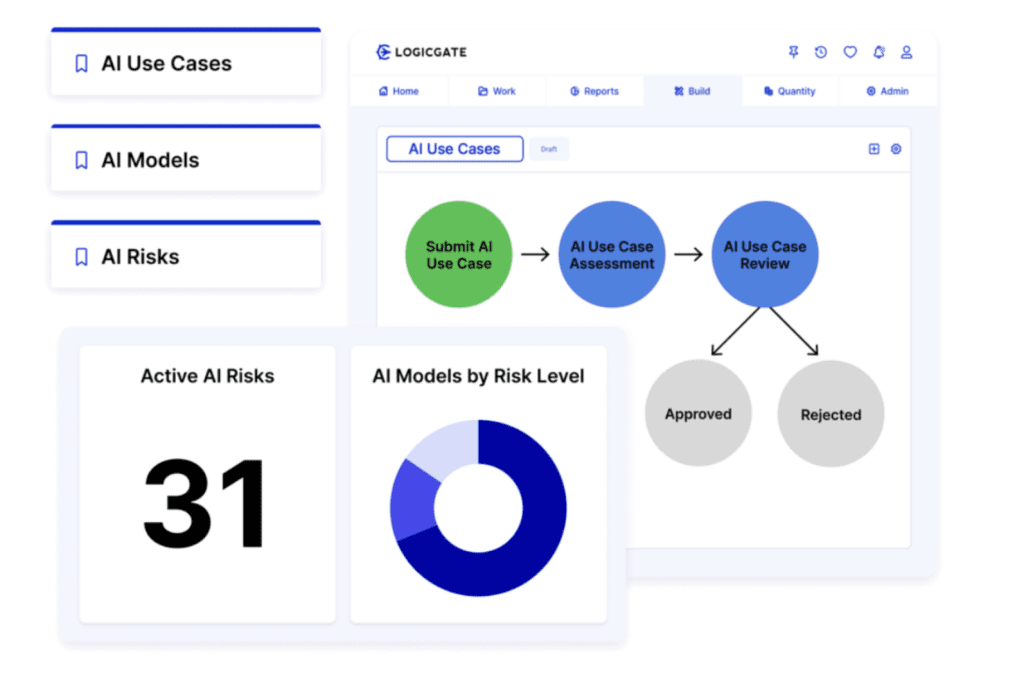

1- AI Governance

Establish a robust mechanism to document and evaluate each AI use case before deployment. This involves creating a centralized repository where all AI projects are submitted for review. The review process should assess the AI system's intended use, scope, stakeholders, and compliance with regulatory requirements. By doing so, organizations can ensure they are fully aware of the AI systems in operation and can manage them effectively to prevent non-compliance issues.
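
As a rough illustration of what such a centralized repository might look like in code, here is a minimal in-memory sketch. The `AIUseCase` and `UseCaseRegistry` classes, their fields, and the status values are all hypothetical. They mirror the review criteria named above (intended use, scope, stakeholders) and are not a description of any particular product's data model.

```python
from dataclasses import dataclass


@dataclass
class AIUseCase:
    name: str
    intended_use: str
    scope: str
    stakeholders: list[str]
    status: str = "submitted"  # becomes "approved" or "rejected" after review


class UseCaseRegistry:
    """In-memory stand-in for a central repository of AI use cases."""

    def __init__(self) -> None:
        self._cases: dict[str, AIUseCase] = {}

    def submit(self, case: AIUseCase) -> None:
        # Reject duplicates so each use case is documented exactly once.
        if case.name in self._cases:
            raise ValueError(f"use case already registered: {case.name}")
        self._cases[case.name] = case

    def review(self, name: str, approved: bool) -> AIUseCase:
        # Record the outcome of the pre-deployment review.
        case = self._cases[name]
        case.status = "approved" if approved else "rejected"
        return case

    def pending(self) -> list[str]:
        # Names of use cases still waiting for a review decision.
        return [c.name for c in self._cases.values() if c.status == "submitted"]
```

In practice the registry would be backed by a database and the review step would capture who approved the use case and why, but the shape stays the same: nothing is deployed until it has an entry and a decision.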

2- Cyber Risk Management

Conduct thorough risk assessments for each AI system, identifying potential threats and vulnerabilities. Automation can streamline these assessments, ensuring that risk mitigation strategies are promptly implemented. Regular monitoring and updating of these risk assessments are necessary to keep up with evolving threats and to ensure continuous compliance with the EU AI Act.

3- Controls Compliance

Develop a detailed compliance strategy that implements stringent controls and regular assessments to verify adherence to regulatory requirements. This strategy should outline the roles and responsibilities of different organizational stakeholders and establish clear procedures for maintaining compliance. Documentation and evidence of compliance efforts are crucial for demonstrating adherence during regulatory inspections or audits.

4- Policy and Procedure Management

Publish comprehensive AI policies that outline the ethical and operational standards for AI systems across your organization. These policies should be regularly reviewed and updated to reflect any changes in the regulatory environment. Automation can play a significant role in managing these policies, ensuring that all relevant stakeholders are aware of and adhere to them. Automated attestations can streamline the process of documenting compliance, making it easier to demonstrate adherence to the EU AI Act.

5- Third-Party Risk Management

Although not specifically identified in the act, to truly have a holistic view of risk, organizations need to understand AI systems used within their supply chain. This means conducting comprehensive third-party risk assessments to identify AI technologies employed by their suppliers. Effective third-party risk management involves continuous monitoring and evaluation of suppliers' compliance with AI regulations. Establishing clear communication channels and setting expectations for compliance with the AI Act can help mitigate risks associated with third-party AI systems. After all, you’re only as strong as your weakest vendor.

AI Governance in Risk Cloud

At LogicGate, we enable organizations to move fast to harness the power of AI while staying safe with holistic AI Governance. Adding the AI Governance Solution to your GRC program means you can:

- Streamline use case and model approval

- Holistically manage risk across the entirety of your organization

- Implement and assess controls

- Automate policy attestation

- Manage third-party risk

The EU AI Act marks a significant step towards regulating artificial intelligence, setting a global standard for AI governance. For companies, this means taking proactive steps to manage AI use cases, address cyber risks, manage third-party vulnerabilities, ensure compliance, and standardize policies and procedures.